Thanks to Vlad Brown and Drawings Team for Russian and Uzbek translations.

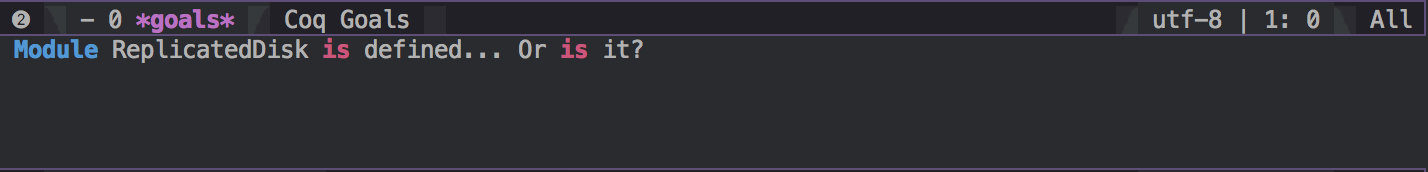

In Fall 2017, I took MIT’s 6.826, Principles of Computer Systems, taught by Turing Award-winner Butler Lampson, Nickolai Zeldovich, and Frans Kaashoek. Despite its rudimentary title, it’s a grad class on building formally verified systems. Using the proof language Coq, we wrote specifications, implementations, and proofs of toy structures: a remapped disk, an atomic pair of blocks, and a replicated disk. We also read quite a few papers from the state-of-the-art in formal methods.

I went into the class believing that formal verification is the future — the only solution to a world of software ridden with bugs and security issues. But after recent events and a semester of trying to apply formal methods, I’m a serious skeptic. In this post, I’ll discuss why I think formal verification has a long way to go — and why it just doesn’t work right now.

Try it — I dare you

First, let’s talk about how we get a system that we can rubber-stamp as “formally verified”.

Simulation proofs

There are two broad ways to write a formally verified system. The first, the more conventional way, involves carefully constructing specifications of the behavior of a system, an implementation of the system, and then manually writing proofs that the implementation matches the spec. All of this is written in a theorem-proving language like Coq, and then extracted to OCaml or Haskell for a runnable implementation.

From personal experience with 6.826 labs, this is a nightmare.

First, the proof burden is huge — for MIT’s FSCQ file system, developed over about 1.5 years using Coq, the complete system was 10x more code than a similar unverified filesystem. Imagine that — 2000 lines of implementation become 20,000 lines of proof! This is partly because Coq is a very general language for reasoning about mathematical logic, and it has little built-in machinery for specialized applications such as complex computer systems. So, we need to build infrastructure from scratch, as well as define our systems from the ground up — from bits and bytes into entire disks.

The labs were largely spared this ridiculous proof burden due to a lot of automation and infrastructure provided by the instructors. Indeed, 2700 lines of Coq (LoC) are devoted to proof infrastructure — and, in the case of the toy replicated disk lab, another 1500 lines for the actual system.

Where does all this overhead come from? Well, we need to support what’s called a “simulation proof”. In this proof style, we step through every procedure in our system and show that every reachable state in our implementation has a corresponding state in our spec. Our spec for each procedure contains three conditions — a precondition, a postcondition, and a crash condition that is true if our code suddenly crashes. Then, our proof involves several things:

- Establish that the precondition of our procedure holds

- Prove every line/branch of the procedure is a valid transition

- If there are no crashes at the end of execution, prove the postcondition holds

- If crashes happened before the end of execution, then prove the crash condition holds before each crash, the crash recovery procedure is a valid transition, and the postcondition ends up holding

Here’s a diagram of a single transition, showing the correspondence between a code state and a spec state. The double arrows are what we need to prove.

1 | spec semantics |

In the FSCQ code, 6000 of 32,000 LoC were devoted to just this infrastructure.

I want to highlight that we need to prove every possible code path — exponentially many in the number of conditional statements, and doubled by considering a crash at every point. Loops need to be proved inductively. On top of all that, if any spec or implementation changes even slightly, all the specs, impls, and proofs that depend on it will change as well. This makes even small changes a huge pain.

Is there any other way, you ask, before you shoot yourself?

Push-button proofs

Another way is the “push-button” style, which formulates the spec and implementation states as symbolic SMT equations that can be passed to a solver, such as Z3. This allows Z3 to automatically verify the system, without writing any manual proofs. Z3 might, for example, check whether each code operation satisfies a formula defining the relationship between code and spec states.

The main effort here is cleverly designing a set of verifiable specs that is actually scalable. It’s hard to determine whether a problem is tractable for Z3, and you have to play all sorts of tricks to make it work. For example, the writers of Yggdrasil, a push-button verified filesystem, spent 4 months exploring ways to scale verification, 2-3 months building their system, and at least 6 more months experimenting with optimizations. In the end, among other wild tricks, they rely on a stack of five layered abstractions, so the solver only has to reason about one layer at a time and won’t get stuck.

What goes wrong?

After all this effort, either carefully designing specs that are amenable to push-button verification, or tediously writing proofs in Coq, what do we get? You’d hope for perfect code, but the truth is a lot less palatable.

The guarantees of a formally verified system rest entirely on the assumptions of the system, including its trusted computing base (TCB). The TCB of a verified system includes the specs, which are manually written and hoped (fingers crossed!) to be correct, the verification tools (e.g., Coq engine, Z3, compiler), and the runtime infrastructure (OS, hardware). This should be a huge red flag. For instance, if you’ve used Z3, you’ll agree that it’s black magic to everyone except the developers, and my professors confess that they’ve found correctness bugs, especially in decidability theories that are not commonly used.

An empirical study

One of the papers we read, An Empirical Study on the Correctness of Formally Verified Distributed Systems, thoroughly analyzes three recent formally-verified distributed systems — two were Coq/OCaml-based, and a third was Dafny/SMT-based. The abstract says it all:

Through code review and testing, we found a total of 16 bugs, many of which produce serious consequences, including crashing servers, returning incorrect results to clients, and invalidating verification guarantees. These bugs were caused by violations of a wide-range of assumptions on which the verified components relied.

Among other serious consequences were command injection and data loss, in supposedly formally verified systems!

I want to discuss some of these bugs, including where and how they were found.

Most of these bugs were found through static analysis of the specs and implementations, as well as conventional debugging and network and file system fuzzing. These bugs generally occurred at the interfaces between verified and unverified components.

11 occurred at what the authors call the shim layer, the OS interface that includes system calls and other primitives. When the verified components do not exactly model the real-world OS implementation, serious bugs arise. For instance, not escaping metacharacters or not handling all possible syscall error codes caused incorrect results, leading to command injection and data loss. Real-world resource limits, such as too-large packets or stack exhaustion, also broke assumptions and crashed or hung the distributed system.

The remaining bugs were due to incomplete or incorrect specs, and critical issues with the verification tools themselves. In particular, these provers were not fail-safe — SIGINTs, exceptions, or other verifier crashes would cause the prover to report that verification succeeded!

As if that’s not concerning enough, there’s two recent headlines in which supposedly formally verified systems had alarming bugs, in one case with disastrous security consequences.

KRACK and CompCert

Big news back in October was the KRACK attack on WPA2-protected WiFi networks. Cryptographer Matt Green sums it up nicely. The two parts of WPA2 — the 4-way handshake and the encryption protocol — have security proofs; the 4-way handshake was even formally verified in 2005! However, no one reasoned about how these two parts interacted in real-world code, leading to almost every single implementation on the planet being vulnerable to a catastrophic Key Reuse attack that can lead to full decryption and forgery of WiFi traffic.

A smaller issue is a recent parsing bug in CompCert, a formally verified C compiler released in 2008. The bug is an amateur scoping issue, where a variable declared in the scope of a for loop clobbers a global variable with the same name.

Although the CompCert paper explicitly says that the parsing step, as well as the assembling/linking steps, are not verified, this kind of bug is a huge blow to the credibility of formal methods. It really makes you wonder what sort of bugs formal verification is supposed to catch.

Is there hope?

Formal verification may not totally be wishful thinking. I’m hanging onto the hope that there is a place for it, and there’s some evidence to support this.

In the empirical study, no bugs were found in the implementation of complex and error-prone distributed protocols (Paxos, RAFT). This shows that verification can be applied to increase reliability. And John Regehr’s compiler bug-hunting paper reported that CompCert did not have any runtime wrong-code errors, which were found in 10 other compilers.

But the guarantees that we expect are much stronger than a correct protocol, handshake, or compiler optimization. We need the entire system to be reliable, and we need proof effort to be minimal for widespread adoption to be feasible.

The bottom line is that formal methods will languish in academic circles, unable to bridge the gap with the real, nonsensical world, for a long time to come.